As someone who spends his fair share of time doomscrolling on social media, I've personally seen how AI language models like ChatGPT are being exploited to spread disinformation and misleading narratives. It's been pretty mind-boggling, to be honest.

Even before the recent astronomical growth of Gen-AI, during the COVID-19 pandemic, my feeds were inundated with anti-vax posts that seemed convincingly written but were clearly trying to stoke fears about vaccine safety. Various digital watchdogs later suggested that many of those posts were likely AI-generated, based on their unnatural prose and sheer volume.

That's the really insidious thing - unlike cheap disinformation bots of the past that were easy to sniff out, the latest AI disinformation is polished enough to fool many people. I've fallen for it myself a few times before realising the "facts" seemed just a little too on-the-nose.

Don't get me wrong: as a futurist, I'm as big an advocate as you'll find for exciting new technologies like Gen-AI, especially when they can have a real, tangible impact on my productivity today. But bad actors are already finding ways to weaponise it against us. ChatGPT and similar services are built on sophisticated language models capable of generating human-like text. Disinformation agents can use them to create convincing fake news articles, misleading social media posts, and even entire narratives designed to deceive.

Just look at the nightmare that was Stable Diffusion getting abused for deepfakes and fake nudes last year. These generative AI models are a double-edged sword - they can be incredibly useful tools, but also potent weapons if misused with malicious intent.

The potential implications of this disinformation are quite worrying when you consider it. Misinformation campaigns risk undermining trust in our institutions like the NHS and democratic bodies. We've already seen how conspiracy theories and falsehoods about Brexit and the EU referendum process sowed division and turmoil.

During the pandemic, I couldn't scroll through my social feeds without encountering dubious anti-vax posts and hoax treatments shared by apparently random members of the public. According to research by the Reuters Institute, a staggering 60% of UK internet users saw false or misleading info about COVID-19 spread online.

Just think about the public health ramifications of all that propaganda fuelling vaccine hesitancy and strange cure myths. It likely contributed to the UK's struggle with vaccine uptake compared to other European nations. Disinformation isn't some abstract threat - it had real-world impacts that made an already tough situation even worse for our front-line workers.

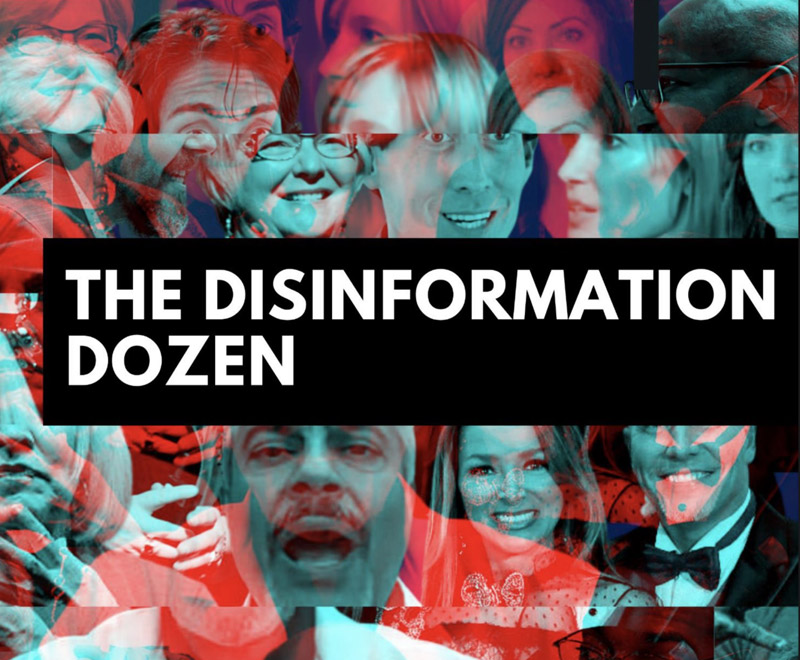

"During the COVID-19 pandemic, The Disinformation Dozen - were 12 social media accounts responsible for using AI to generate over 65% of anti-vaccine content being published on social media."

The exploitation of AI to generate disinformation has reared its ugly head again in recent conflicts like the Russia-Ukraine war and the Israel-Palestine crisis. There were multiple deepfake videos of leaders like Zelenskyy appearing to surrender, and harrowing but completely fabricated images of civilian casualties designed to provoke outrage.

While individual cases were quickly debunked by fact-checkers, the speed and realism with which this AI-enabled disinformation spread online should set off alarm bells. We're seeing coordinated campaigns and bot networks amplifying this synthetic media at a frightening scale to sow confusion and discord.

As we gear up for major elections in 2024 across the US, UK, Europe, and India, you can bet nation-states and bad actors are preparing to unleash AI-generated propaganda and fake narratives to mislead voters and inflame social tensions. From what I've seen, the disinformation peddlers are often outpacing our ability to detect their AI trickery.

If we don't get serious about a multi-pronged counterattack involving advanced AI detection tools, sensible regulation, and beefing up digital literacy programs, I dread to think about the havoc these AI fakes could wreak on our democracies and social fabric. Staying vigilant and questioning everything we see online has never been more crucial as this disturbing use of otherwise amazing technology grows more potent by the day. The future's bright, but we need to get ahead of those trying to twist AI into a dystopian mind-bending weapon.

So what can we do about this looming disinformation crisis? I think it has to be a multi-pronged approach:

1. Enhanced AI Detection Tools

The major tech firms are thankfully taking this threat seriously, with Meta (Facebook) and Google spearheading efforts to develop advanced AI systems to detect and remove disinformation at scale. Twitter (now X) has also recently stepped up its own efforts after being criticised for its delayed response.

Meta in particular has made notable strides, investing £19 million into its so-called "Red Team" tasked with probing its AI systems for vulnerabilities that malicious actors could exploit to spread false narratives and propaganda. Their automated tools analyse linguistic patterns, account behaviour, and data signals to flag potential coordinated disinformation campaigns before they gain traction.

Just last month, these detection systems helped uncover and dismantle a Russian-backed operation using a network of fake accounts across Facebook, Instagram, and Twitter to stoke tensions around the Ukraine war. Ironically, AI itself, while not a silver bullet, is a powerful defence against AI-generated disinformation.

2. Public Education

But we can't simply rely on these AI filters to catch everything. Teaching people to be more discerning about what they consume and share online is paramount. The UK government has thankfully made digital literacy a bigger priority in recent years.

Programmes initiated by the likes of the National Literacy Trust provide educational resources to help people of all ages spot unreliable sources, check claims against authoritative sites, and understand how bad actors use emotional manipulation tactics and cherry-picked data to mislead.

However, according to a recent Ofcom report, there's still a long way to go in improving public resilience against false and misleading content online. Over a quarter of UK adults struggle to identify misinformation, and that number jumps to over 40% for those aged 65 and up.

"Over a quarter of UK adults struggle to identify misinformation, and that number jumps to over 40% for those aged 65 and up."

3. Regulation and Policy

Robust regulation of online platforms that empowers users and enforces transparency will also play a vital role going forward. The UK has been fairly proactive on this front compared to other nations.

The upcoming Online Safety Bill grants Ofcom broader powers to demand tech giants rapidly remove illegal and harmful content like disinformation campaigns that could spark violence or undermine public health efforts. Major platforms will also have to conduct regular risk assessments and be more transparent about their content moderation policies.

Over in the EU, the landmark Digital Services Act (DSA) is also set to have global implications once it’s fully implemented. It sets comprehensive new rules requiring accountability and disclosures from companies like Meta and Google regarding their recommendation algorithms, ad targeting systems, and other machine learning models that have been abused to amplify disinformation in the past.

The DSA also mandates robust fact-checking for viral content and empowers users with more options to flag problematic posts. Tech firms face hefty fines if they don't take sufficient action. Between this and the UK's Online Safety framework, there are finally some regulatory teeth to curb the darker applications of AI.

4. Industry Efforts

Of course, major players in the tech industry itself also have a key part to play in self-regulation and implementing rigorous integrity standards. Microsoft, for one, has published a Responsible AI Standard setting out the internal requirements its teams are expected to meet when building and deploying AI systems.

Firms like UK-based DeepMind and US-based Anthropic are following suit by implementing strict ethical AI principles from the ground up, such as watermarking AI outputs to improve traceability and capping capabilities that could enable malicious deepfakes or hate speech generation. It's an encouraging sign that the industry itself recognises these existential risks to public trust.

At the end of the day, platforms like Google, Meta (covering Facebook, Instagram and WhatsApp) and X are the main battlegrounds for this disinformation war. Their content moderation policies and AI detection capabilities could make or break the effectiveness of these campaigns.

For all that is wrong with Facebook, I do try to cut them some slack in this space. Certainly from a tech observer's perspective, they've been driving a lot of the developments in AI systems that detect fake accounts, spot coordinated inauthentic behaviour, and flag misinformation. Having said that, my goodness, a lot of hogwash still slips through the cracks on their platforms!

The exploitation of powerful AI tools by disinformation peddlers is a significant threat that we're still just coming to terms with. While the benefits of AI are immense, we have to get serious about addressing these emerging risks to society.

Through better AI detection, teaching critical thinking skills, and sensible regulation, we can hopefully stay ahead of those trying to manipulate us with AI-generated propaganda and fake narratives. The future of technology is bright, but we need responsible development and oversight to avoid its twisted misuse.

By staying vigilant and questioning what we consume online, we can all play a part in combating the spread of AI-driven disinformation. I know I am already double-checking my news sources before hitting that share button!

Usman is a digital veteran and a renowned expert in human-centric innovation and product design. As the founder of Pathfinders, Usman works directly with businesses to uncover disruptive opportunities and helps create tangible business value through design and innovation.
